Faraday Cage installation by Sue Anne Rische, from her solo exhibition “Intelligence: An Art Show About Data Collection” (2020). Photo by Colette Copeland

Note: for numbered references contained in this interview, please see **notes at the bottom of this page.

Sue Anne Rische’s solo exhibition, ‘Intelligence: An Art Show About Data Collection,’ is on view at The Art Gallery at Collin College, Plano, through March 18.

Sue Anne Rische was one of the first people I met when I moved to Dallas in 2011; it was during a great drought. My first impression of the area was bleak (it had not rained in almost a year), but Rische’s warm welcome, along with her intellect, artistic pursuits and unusual hobbies, drew me in. Rische is a Professor of Art at Collin College, Plano, and has exhibited locally and internationally, in Japan, the Netherlands, Spain, and England, among other places. I wrote about her 2014 Collin College exhibition, Privacy World, for both Arts and Culture Texas and Eutopia.

Rische’s ongoing investigations into privacy reveal Orwellian prophecies that have come to pass, as well as how our online data is bought and sold without our consent as we happily post our lives on social media. To quote Rische’s artist statement (which quotes the song “We Want Your Soul” by Adam Freeland):

“Go back to bed America, your government is in control again

Here. Watch this. Shut up

You are free to do as we tell you

You are free to do as we tell you (we want your soul)”

Installation view of Sue Anne Rische’s “Intelligence: An Art Show About Data Collection” (2020). Work is activated by a camera flash. Image courtesy of the artist.

Colette Copeland: When and how did you become interested in the topic of privacy?

Sue Anne Rische: If I move backwards in time from my first show about privacy in 2014, I’d say that puts the birth of my interest in privacy in 2012, which is when I joined reddit. Privacy issues often made the front page, so I began diving into the privacy subreddit.[1] I am also a fan of futurology and read up on their predictions and warnings. An avid fan of sci-fi, I find that science fiction authors often base their dystopian worlds and technologies on our own developing technologies. They tend to see the writing on the wall.

CC: We’ve known each other a long time, so I am opting for full confession here. When you first started this privacy research, dare I say obsessive privacy research, I thought you sounded like a paranoid conspiracy theorist. Now many of your early concerns have come to pass. What have been the major revelations for you on this topic in the past eight years?

SR: I get that a lot, tin foil hat and the like, but the New York Times currently appears to be releasing a privacy-related article nearly every day. Rather than feeling vindicated, I’m not happy that these issues are real. In fact, I’m relatively certain that all of the privacy-related topics I had talked about eight years ago were already taking place. The parties that employed the technology to spy on our daily habits were simply careless with our data and that ends up making the news. They are not sorry about doing it; they are only sorry about being caught. However, I am happy that it’s been made more public because we need to be angry about it and we need to protect ourselves.

I guess one of the bigger controversies that made the news was the Facebook–Cambridge Analytica data scandal in 2018.[2] If you gave up on the news after 2016, I’ll summarize it as best as I can. Thanks to a whistleblower, we learned that Cambridge Analytica, a data brokerage firm, harvested Facebook users’ data without consent. That’s 50 million users. The data that Facebook users inputted gave CA enough information to profile each user’s location and their political leanings so that it could target them with ads and motivate them to attend political gatherings. It was discovered that CA worked for Ted Cruz’s and Trump’s campaigns. All of those “What Hogwarts House do you belong to?” quizzes and “Who’s your celebrity doppelgänger?” prompts are ultimately created to collect data on you. Same for “likes” on Facebook, Twitter, reddit, Instagram, etc. There was also that time that Facebook studied and observed the emotions of teens, collected the psychographic data, and sold it to a third party, turning minors’ emotional states into a commodity.[3]

More recently, facial recognition cameras are in the news. Rather than overwhelm you with all of the ways this technology has been misused already, I’d like to point to where the AI was trained: from images scraped from the internet without your consent. Your images. And guess what it was used for — to train facial recognition software in China. And what’s China doing with it? They’re using it to force people to unlock their phones, to publicly shame people wearing pajamas[4], to instantly fine jaywalkers[5], to identify and roll up protesters in Hong Kong[6], and to imprison minority religious groups.[7] Your faces. Without consent.

Another artist I admire whose topic is privacy, Adam Harvey[8], is focusing on facial recognition right now. You can see what he’s up to on his site: megapixels.cc. The project Microsoft Celeb Dataset is a collection of celebrity faces used to improve AI software. Here the term “celebrity” has been stretched to include a cryptologist, a digital rights activist, and your friend Jill Magid, an artist who questions systems of power through her work. For some strange reason these people’s images were scraped into this dataset. Also included are Ai Weiwei[9] and Trevor Paglen, two more artists who are asking powerful entities uncomfortable questions. One of the big fears of identifiable data falling into the wrong hands is that it would be used against minority groups, dissidents, and whistleblowers. I believe this has been proven already.

CC: In the article I wrote in 2014 about Privacy World, I cite how your project emphasizes “the current public’s lack of discernment about sharing private information over social media, allowing companies to use their data as a marketing tool and our overall ignorance and/or apathy about our total loss of privacy and control.” In the last six years of multiple government and corporate security breaches and prolific computer hacking, clearly privacy should be even more of a concern. Yet the vast majority of the public doesn’t seem to care. Shouldn’t it freak us out that our phone knows where we are at all times and even where we are supposed to be? To what do you attribute this apathy?

SR: There is a popular phrase uttered when privacy advocates try to raise awareness and talk about what could go (and is going) wrong with the unregulated collection of our data, and that is: If you’ve got nothing to hide then you’ve got nothing to fear.[10] And while Edward Snowden’s retort feels overused, it’s probably because it’s such an appropriate way to frame his position: “Arguing that you don’t care about the right to privacy because you have nothing to hide is no different than saying you don’t care about free speech because you have nothing to say.”[11] To those who say they have nothing to hide, go ahead and get their SSN, their address, bank accounts, birthdate, driver’s license number, all of their passwords, put cameras in their bathroom and bedroom, have keys made to their house or better yet remove all of their doors, and make a single website devoted to sharing all of their information. If I sound like an asshole here, this is information about you that is for sale on the dark web. SSNs sell for $1.00. Shopping sites, social media sites, and even government sites[12] don’t know how to protect your data, either. If you think your data is safe on any website or cloud, you might want to do some research.

Many people are finding themselves shocked that words scribed in Orwell’s Nineteen Eighty-Four[13] and Huxley’s Brave New World[14] are ringing true, but I think Huxley got it right as to why we are so apathetic: we are too entertained to care. There is a privacy cost-benefit analysis that we undertake every time we click “I understand” or “I agree” to data cookies and license agreements. Privacy is an abstract idea that we shove in a box and store in the attic when the immediate gratification and dopamine release from Pokemon Go calls to us. It’s only when our data gets misused, taken out of context, or used against us that we care, and by that time it’s already too late.

What we don’t get to see is the profile built out of our own data and how frighteningly accurate it is. There was the time that the pregnant teen (who didn’t realize it) had Target ads for baby gear directed at her father… because she started buying unscented products.[15] They have enough data to predict our emotions and behavior. That, together with facial recognition software that has also been trained to detect our emotions, reminds me of “thoughtcrime” from Nineteen Eighty-Four and of the precogs who predicted crimes before they were committed in Philip K. Dick’s The Minority Report[16]. Yeah, yeah, solving crime is good, but in my opinion there is too much misuse of the data and too many easily and falsely accused to just roll out the technology without the proper protection in place. That’s what’s happening. Not to mention that privacy simply shouldn’t be sacrificed for security.

CC: Privacy World invoked parody and humor as a strategy for engaging the viewers. In your current exhibition, Intelligence, the tone is darker and more somber. Viewers are asked to willingly get into a cage to protect their privacy, thus imprisoning them from the Big Brothers of corporate megalomania and government, as well as themselves. The white-on-white vinyl wall graphics are subtle — almost invisible — and require viewers to actively engage their camera’s flash to make the data visible. What are the specific components of the exhibit? What is the shift in conceptual strategy and tone?

SR: I don’t like to be “preachy” about anything, particularly privacy. That’s when people say “tin foil hat!” and stop listening. But the humor in Privacy World did get the message across. One of my clearest memories of the show was hearing from a person who made her way through my “privacy carnival.” At the end of the show she told me she felt sick [after discovering all of the ways that data was being collected and used].

I had six months to prepare for Privacy World and that gave me a lot of time to brainstorm and develop the ideas. This time around I had three months to prepare and had to come up with an idea quickly. This is the third time I’ve shown in this gallery. It’s essentially a giant forty-foot cube. My most recent work has been in the form of handheld fans that I made at a couple of Japanese residencies, but there’s no way my small fans, made to hide one’s face from surveillance cameras, could fill that much space in such a short amount of time. I needed something large and impactful, so once again I turned to the installation format.

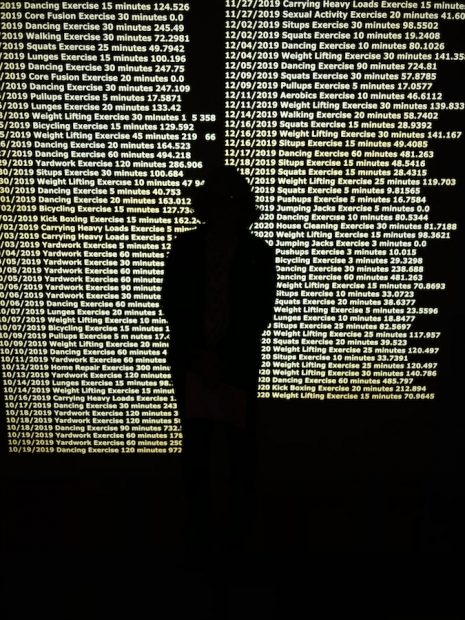

This show consists of four components: the data-blocking Faraday cage in the middle, hidden text and images on the walls, a sound piece, and the alteration of the temperature in the gallery. The data and imagery for the walls are based on information I received from a private investigator and an attorney whom I hired to dig up what they could on me from the internet. I [also] requested the data collected on me from Google, Facebook, Niantic, and Spotify, and downloaded it. In addition, a marketing professional did an analysis on a half-mile radius of where I live.

I’d like to highlight two of the subtler works in the show: the sound piece and the temperature piece. The sounds are a collection of short observations made by friends about their loved ones. I asked for covertly whispered descriptions of mundane activities. I love the results of these sound bites. While I didn’t ask for it, people included their own interpretations of their spied-upon victims’ everyday actions. It’s interesting that when we ourselves are put into the role of spy, we automatically assume that our marks are doing something suspicious.

The most ethereal piece appearing in this show is my manipulation of the temperature; the gallery is cold, while the Faraday cage is warmed by heat lamps. Viewers and readers are encouraged to offer additional interpretations, but the main drive of this piece is to highlight the “chilling effect.” When a person is watched, their behavior changes. When verbal discourse is recorded, one edits what they say. When people learn that their search terms are logged in Google’s search field, they are less likely to search for topics that will collect data that could harm them in the future. (Think medical conditions and questions about sexuality.) The chilling effect is prohibitive to journalists, dissidents, and whistleblowers. Relief can be found within the confines of a warm Faraday cage.

Somber is a good word to use for this show. Perhaps the level of “heartedness” (light or heavy) in my work comes from the way I am feeling at the time. A little humor may creep into some of the text I choose to reveal on the walls and into some of the audio files that will quietly play in the background, but this show is no laughing matter. The cage and wall pieces have surprises to reveal that are both beautiful and terrifying. I desire quiet contemplation from the viewers, but I also conscript them as players in my own game. I often view my work as incomplete until a viewer engages with it.

CC: In your own life, you have conspicuously tried to stay off the grid as much as possible, including avoiding social media and protecting your privacy. I noticed that with this show, you have put yourself out there. What are your concerns about compromising your ideals for the sake of the artwork and your own security?

SR: Digging up data on myself has both troubled me and set my mind at ease. Because I started taking measures to conceal my data through the use of Firefox, DuckDuckGo, and a VPN, there is less data collected on me than on most. I have the aforementioned reddit account which I mostly use for lurking under an assumed name. I’ve been on Facebook for twelve years and did a soft quit for about six of those. I only rejoined to start promoting a book I wrote at the behest of my editor and coach. At that time, I joined Twitter for the same reason. While I use it primarily to self-endorse, I still can’t help but click an occasional thumbs up icon and leave a cheerful or funny comment.

For this show, I finally caved to Instagram and LinkedIn. I must admit I died a little inside in the same way I did when I shoved a screaming, angry part of me into a tightly corked bottle so that I could justify playing Pokemon Go. I am aware of the irony in using the aforementioned social media platforms. And here comes the privacy cost-benefit analysis. In using this technology to promote a show about privacy, I have to give up some privacy. Which is more important: allowing my supposedly identity-scrubbed data to be sold off to some third party to create an emotional and behavioral profile on me to unknown ends, or exploiting an opportunity to garner attention from the art world, thereby furthering my own personal goals and voicing important concerns? I’m sacrificing the abstract to gain something physical and real, and in doing so, perhaps I will reach the minds of the right people who can help make the changes in privacy policy that I desire.

Sue Anne Rische’s ‘Intelligence: An Art Show About Data Collection’ at The Art Gallery at Collin College, Plano, through March 18.

Artist Lecture: Thursday March 5, 1-1:45 PM, and a closing reception 4-7 PM.

**Below are notes (hyperlinks) pertaining to the contents of the interview.

1. https://old.reddit.com/r/privacy/

9. https://www.theguardian.com/artanddesign/2011/apr/04/ai-weiwei-missing-chinese-police

10. https://en.wikipedia.org/wiki/Nothing_to_hide_argument

11. https://www.reddit.com/r/IAmA/comments/36ru89/just_days_left_to_kill_mass_surveillance_under/

13. https://en.wikipedia.org/wiki/Nineteen_Eighty-Four

14. https://en.wikipedia.org/wiki/Brave_New_World

16. https://en.wikipedia.org/wiki/The_Minority_Report

1 comment

Another aspect I mentioned back in 2007 on my old blog has to do with the “semantic web” and how the Cloud could give a centralized authority the ability to completely and virtually undetectably re-write history. (Fwiw, back then, the Cloud was an obscure idea referred to as “the Worldbeam.”)

Re- the “nothing to hide means nothing to fear” meme, I’d like to add Daniel Ellsberg’s point that the Stasi didn’t need to know about something you’d done wrong, all they needed to know was what you cared about. You were looking for a better job than the one you have now? You wanted your kid to get into a good school? That kind of info was enough to give the Stasi the power to make decent people do things they might never otherwise have done.

A balance of power requires a balance of info.

We’re at a critical juncture in human history, and few people either in power or out seem to understand the likely consequences of the choices we’re making. I’m excited to see Sue Anne’s show.