Gizmodo published an entertaining and thought-provoking interview today with two contributors to the new book Robot Ethics 2.0: From Autonomous Cars to Artificial Intelligence published by California Polytechnic State University. The book tackles thorny (and sometimes mind-boggling) issues in humanity’s developing relationship with, and dependence on, artificial intelligence. Will Bridewell and Alistair M. C. Isaac are robo-ethicists who co-authored the section “White Lies and Silver Tongues: Why Robots Need to Deceive (and How).”

Gizmodo summarizes that section this way: “They argue that deception is a regular feature of human interaction, and that misleading information (or withholding information) serves important pro-social functions. Eventually, we’ll need robots with the capacity for deception if they’re to contribute effectively in human communication… .”

The interviewer begins with the cautionary tales of popular science fiction, and the authors clearly explain the behavior of Ash in Ridley Scott’s Alien, R2-D2 in George Lucas’s Star Wars, and HAL 9000 in Stanley Kubrick’s 2001: A Space Odyssey. It turns out there are pretty good robo-reasons these machines behave the way they do: however pathological their behavior seems, it is not.

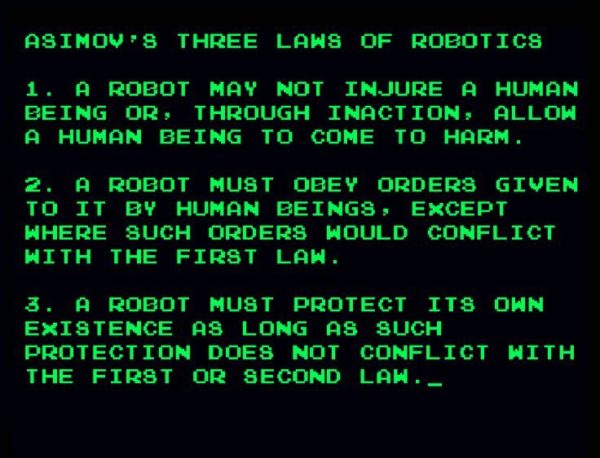

In subsequent questions, they get into impression management, the Scotty Principle (and why it works), and the representational theory of mind. They also explain: “Because the jury is still out on whether robots can ever ‘have’ emotions, it is safe to say that any robot that can signal human-like emotions would be deceptive, especially if it could differentiate between human and robot emotional content.” Finally, they lay out the ethical parameters of Asimov’s Laws and posit that we should start there when developing robots capable of deception.

Read the whole interview here.